Our Approach

Context

Before reading this, start with the project summary. To understand the terminology, we also recommend reading our taxonomy, which applies to everything we do.

Most modern software applications (think Slack, Notion, Google Drive) consist of many components. It could be argued that virtually no software today is free of dependencies on open-source components.

Take our own website as an example. Here are some of the components used to build it:

- Gatsby (the overarching framework used to build the website)

- Tailwind CSS (a CSS framework for building front-end designs quickly)

- React (the UI library that Gatsby builds on)

We also use a third-party content management system (CMS) called Prismic to make the website editable.

An important point to note: our own code makes up perhaps ~1% of the website. The libraries above, which do most of the logic and heavy lifting, account for the remaining ~99% of the total code.

So if we want to improve the energy-use of the website’s development, where do we start? Obviously with the ~99%: the components.

When we built the website, was energy-efficiency taken into account when selecting the components? No: performance, ease of use and an active maintenance community were the key selection criteria. Would it have been possible to select components based on energy-efficiency?

For example, neither the Gatsby website nor the Gatsby code repository offers any indicator that would inform decision-making with regard to energy-efficiency. One could argue that a slim, high-performing component is also energy-efficient; however, this is not fact-based and there is no public evidence to support it.

How to measure?

How do you validate the efficiency of a car engine if you don’t have a car around it? You test it in a laboratory, based on real-life scenarios.

Software components are tested in a similar way, because if they break, they can take millions of applications down with them (or expose a security vulnerability, as happened with Log4j). Well-maintained components therefore often have hundreds, sometimes thousands, of automated tests, which are executed whenever the component’s code changes. These tests verify that the functionality is intact and meets the requirements defined by the specification (API).

If we wanted to measure the energy-efficiency of a component, in principle we would have to build a software application around it (the car) and then run tests on it. This is what the Oeko Institut, in collaboration with the Birkenfeld Campus, did in previous work. However, this approach is hard to scale, especially given the more than 10 million software components out there.

So why not use the test suites that already come with each component? Measuring the energy used while they execute does not produce directly comparable results: if two components offer the same functionality but differ in their test approach or test coverage, their results will not be comparable, and someone could simply delete tests to improve the measurement. However, it does make the measurement scalable and repeatable. Most importantly, it gives the developers of a component a clear metric and indicator to work against: if energy-use is to be improved, the metric should go down.

To summarize: our approach to measuring the energy-use of a software component is to measure the total energy consumed while executing its test suite, which typically exercises most of the code that would also run in a real application.
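
To make this concrete, the measurement can be sketched in Python on Linux machines that expose Intel RAPL (Running Average Power Limit) energy counters through the kernel’s powercap interface. This is a minimal sketch under several assumptions, not the project’s tooling: the `intel-rapl:0` path (CPU package 0 only), the function names and the `pytest` command are illustrative; reading `energy_uj` requires root privileges on many recent kernels; and the counter covers the whole CPU package, so energy used by other processes during the run is included.

```python
import subprocess
from pathlib import Path

# Assumption: Linux with the intel_rapl powercap driver; package-0 domain only.
RAPL_DOMAIN = Path("/sys/class/powercap/intel-rapl:0")


def energy_delta_uj(before: int, after: int, max_range_uj: int) -> int:
    """Energy (microjoules) between two counter readings, allowing one wraparound."""
    if after >= before:
        return after - before
    # The counter passed max_energy_range_uj and restarted from zero.
    return (max_range_uj - before) + after


def read_uj(name: str) -> int:
    """Read one integer counter file (e.g. energy_uj) from the RAPL domain."""
    return int((RAPL_DOMAIN / name).read_text().strip())


def measure_test_suite(command: list[str]) -> tuple[int, float]:
    """Run a test command and return (exit code, package energy in joules)."""
    max_range = read_uj("max_energy_range_uj")
    before = read_uj("energy_uj")
    result = subprocess.run(command)
    after = read_uj("energy_uj")
    return result.returncode, energy_delta_uj(before, after, max_range) / 1e6
```

For example, `measure_test_suite(["pytest", "-q"])` returns the exit code of the test run together with the energy consumed by the CPU package while it ran. Only a single counter wraparound is handled, so very long test runs would need periodic sampling instead.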

When to measure?

When developing a software component using a test-driven approach (which is common), there are two moments when the tests are executed, each serving a different purpose:

- During development, locally on the developer’s computer, often running automatically whenever a file changes (see example below).

- When the code is ‘checked in’, meaning when it is uploaded to the shared code repository (think of it as a shared folder with a version history, if you are not familiar with repositories). All tests are run to ensure that the developer’s committed (‘uploaded’) change does not break anything else in the application.

Measuring during CI/CD

The second case is most often handled by a continuous integration/delivery (CI/CD) service; we have listed some of the most common ones here. To reduce the effort required from component developers, it would be highly desirable for the CI/CD applications themselves to display the energy-use per test execution.
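
As one illustration of a CI/CD service displaying such a result, GitHub Actions renders any Markdown appended to the file named by the `GITHUB_STEP_SUMMARY` environment variable on the job’s summary page. The sketch below assumes the energy value in joules has already been measured; the function name `report_energy` and the table layout are hypothetical, and other services (e.g. GitLab) use different mechanisms.

```python
import os


def report_energy(test_command: str, joules: float) -> str:
    """Format one test-suite energy measurement as a Markdown table and,
    when running inside GitHub Actions, append it to the job summary."""
    markdown = (
        "| Test command | Energy (J) |\n"
        "| --- | ---: |\n"
        f"| `{test_command}` | {joules:.2f} |\n"
    )
    summary_path = os.environ.get("GITHUB_STEP_SUMMARY")
    if summary_path:  # only set inside a GitHub Actions job
        with open(summary_path, "a", encoding="utf-8") as summary:
            summary.write(markdown)
    return markdown
```

Outside GitHub Actions the function only returns the Markdown, so the same step can run locally without side effects.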

Developing the integrations with common continuous integration/delivery tools & platforms is a key part of the project.

Measuring locally

Measuring energy-use on the developer’s local computer is more challenging, because the hardware used influences the measured energy-use of the component. This makes it difficult for development teams to ‘work on the same problem’ if the problem looks different on each developer’s computer.

Local measurements will therefore most likely have to be calibrated first: the computing-efficiency of the local computer (e.g. performance per watt) needs to be benchmarked, and the energy measurement then calibrated to that computer.

At the time of writing, we have not yet identified the exact calibration method; however, we have identified the need for calibration and will develop an approach during the course of the project.
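
Since the calibration method is still open, the following is only one naive possibility, sketched to make ‘performance per watt’ concrete. It assumes a benchmark reports how many operations it completed and how much energy that took, giving an efficiency η in operations per joule, and that the energy of a test run scales linearly with the work done, so that E_ref ≈ E_local × η_local / η_ref. Real hardware (idle power, frequency scaling, memory-bound workloads) will not strictly follow this, and all names are hypothetical.

```python
def efficiency(ops_completed: float, energy_joules: float) -> float:
    """Computing efficiency from a benchmark run, in operations per joule."""
    return ops_completed / energy_joules


def normalize_to_reference(local_energy_j: float, local_eff: float,
                           reference_eff: float) -> float:
    """Naively scale a local measurement to the reference machine.

    Assumes a fixed amount of work W per test run, so that
    local_energy = W / local_eff and reference_energy = W / reference_eff.
    """
    return local_energy_j * local_eff / reference_eff
```

For instance, a laptop that is twice as efficient as the reference machine and measures 5 J for a test suite would be reported as 10 J on the reference scale.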

Displaying energy-use

To return to the point we identified at the beginning, the ability for a developer to choose a more energy-efficient component, we also need to display this information as part of the component’s documentation.

Furthermore, we need to standardize the process of measuring energy across components, to ensure that all measurements are based on the same methodology.

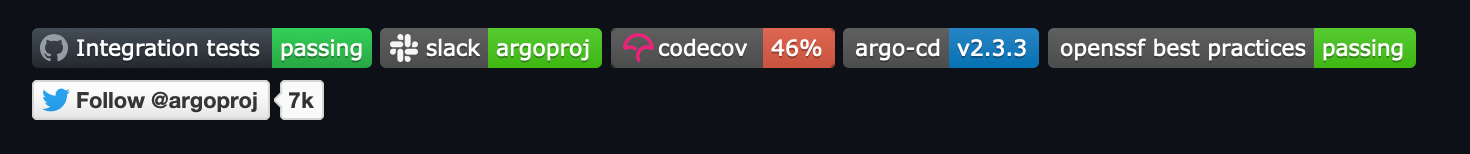

An approach to such a display already exists. In the development community, it is very common to show ‘badges’ that display various states of a component. In the example above, you can see that ArgoCD currently has all of its tests ‘passing’ (meaning no issue was found), and that the tests cover 46% of all lines of code, meaning that 54% of the code is not executed when the test suite runs.

As part of the SoftAWERE project, we want to further evaluate whether such a badge can and should exist for the energy-use of a software component, enabling developers to consider energy-use as a selection criterion when picking a component.
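
An energy badge could reuse existing badge infrastructure. Shields.io, for instance, can render a badge from any URL that returns JSON in its endpoint format (`schemaVersion`, `label`, `message` and an optional `color`). The sketch below produces such a document from a measured value; the label text, unit formatting and fixed color are hypothetical choices, since no grading scale for energy-use exists yet.

```python
import json


def energy_badge(joules: float, label: str = "test energy") -> str:
    """Build a Shields.io endpoint-badge JSON document for a test-suite energy value."""
    # Show kilojoules for larger values to keep the badge short.
    message = f"{joules / 1000:.1f} kJ" if joules >= 1000 else f"{joules:.0f} J"
    return json.dumps({
        "schemaVersion": 1,  # required by the endpoint badge format
        "label": label,
        "message": message,
        "color": "blue",  # hypothetical: no energy grading scale is defined yet
    })
```

Serving this JSON from a stable URL, for example one updated by each CI run, would let a component’s README show the latest measurement next to its existing test and coverage badges.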

Furthermore, we will explore how to develop an open standard that can be adopted by testing and CI/CD tools, and that enables the open-source community to continuously improve the methodology itself.

Reference CI/CD test systems

In addition to standardization and to facilitating the integration of SoftAWERE’s energy-measurement approach into test and CI/CD tools, our goal is to make a reference system available to anyone developing open-source software components.

This reference system allows developers to run their component’s tests on calibrated and verified test servers, e.g. using GitLab as the CI/CD tool. This gives them a verified reference measurement that they can compare against their own CI/CD systems to validate that those measurements are correct.
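
In its simplest form, such a validation could check whether a team’s own CI/CD measurement lies within a tolerance of the reference measurement for the same test suite and commit. This is a sketch under assumptions: the function names and the 10% default tolerance are hypothetical placeholders, not values defined by the project.

```python
def relative_deviation(measured_j: float, reference_j: float) -> float:
    """Relative deviation of a measurement from the reference measurement."""
    return abs(measured_j - reference_j) / reference_j


def matches_reference(measured_j: float, reference_j: float,
                      tolerance: float = 0.10) -> bool:
    """True if the own CI/CD measurement is within `tolerance` of the reference.

    The 10% default is a hypothetical placeholder, not a project-defined threshold.
    """
    return relative_deviation(measured_j, reference_j) <= tolerance
```

A relative rather than absolute tolerance keeps the check meaningful for both small and large test suites.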

This reference system should outlive the project and remain available for the foreseeable future. To minimize its environmental impact, it shall be built using refurbished hardware and run in a data center that we consider environmentally sustainable, e.g. one powered by physically supplied renewable energy or one that reuses its waste heat.